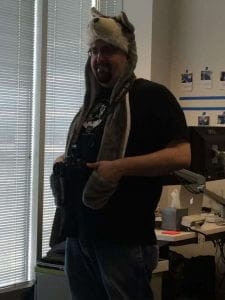

This is Mark. He challenges me. He confronts me. He inspires me. I think he is amazing. Mark doesn’t do things because someone like me who claims to know a bit about agile says so. He looks at the world from every perspective. I love this about him because he forces me to think deeper than I’ve ever had to in many areas. He stretches me as a coach and forces me to keep growing.

Definition of Done was an area where Mark really inspired me to dig deep. This was his concern:

“I hear and see people teaching Definition of Done like it is a checklist of all the things a team has to do in order for a user story to be done. I think this undermines ownership of quality code by the team because the checklist becomes a crutch and an excuse to be mediocre. It causes people to say things like, ‘Well it meets the definition of done,’ when they know that the code still isn’t as good as it could be. It develops the attitude of, ‘Oh well, it wasn’t on the list so I don’t have to do it.’”

And you know what? I have witnessed the same behaviors, so I had to agree with him: he was right. While this poor behavior is often on display, it was never what the creators of Scrum intended, and it is definitely not the attitude agile teams should adopt.

After multiple conversations about the intent behind DoD and how it can be used for good and not evil, Mark said, with a bit of hesitation, "I'll trust you." This beautiful confrontation sent me on a mission to prove that teams can create a Definition of Done that promotes a healthy value system and attitude about quality while still serving its purpose as a clear working agreement about what it means to be done.

I joined a few teams for collaborative sessions where we explored the attitude and value system we want to employ when developing software. We discussed the practices we use that support those values and mindsets. Finally, after we had fleshed all that out, we talked about the minimum items we require of ourselves on every piece of code we present to the Product Owner when we ask them to accept the work into their product. We agreed that we would consistently apply the mindset around quality set forth in our DoD and employ the practices wherever they apply when creating software.

Mark somehow got hold of an unfinished draft of the DoD for one of the teams today and sent me one of the best compliments an agile coach can receive. He said, "That's one of the best DoDs I've seen… you could change my mind about them if that's the idea we push."

I am a better coach because he didn't just give in to industry best practices. The teams developing our code are better because they had to dig deep and determine the value system they wanted to work from. And the product will be better because the quality standards are being considered in grooming, planning, and development. Teams are growing and code is getting better, all because one person was willing to take a stand for his beliefs. Great job, Mark!

Just in case you're interested, here's part of the DoD that two teams sharing one code base came up with:

In our quest to deliver quality software…

We will strive to understand our customers and partner with them to build the right solution that they perceive as valuable to meet their needs. Some of the practices we will use to ensure value are:

- Creating frequent feedback loops with stakeholders and customers

- Delivering incremental value and iterating on feedback received to increase value delivery over time

- Building in ways to measure trailing indicators of actual value delivery

We will create software that is performant and measurable. Some of the practices we will use to ensure performance are:

- Performance Testing

- Performance Monitoring by gathering metrics before and after introducing new code into production

- Creating and adhering to SLAs

- Building in the ability to measure performance over time

We will create software that is stable. Some of the practices we will use to ensure stability are:

- Refactoring

- Monitoring

- Using heuristics to build relevant tests

- Health checks for less watched systems

- Never making changes to code or configurations in production

We will create software that is maintainable. Some of the practices we will use to ensure maintainability are:

- Completing code reviews that include feedback

- Reducing the introduction of technical debt

- Making refactoring and architecture a priority and helping the business to understand the value it adds to the product

We will create software that is free from critical defects. Some of the practices we will use to reduce the introduction of escaped defects are:

- Creating automated tests for major or critical features and core functionality

- Creating test plans that are repeatable by anyone

- Developer testing in the development/feature branch

We will create software that is self-incriminating. Some of the practices we will use to ensure our software is self-incriminating are:

- Automated testing when appropriate

- Features have automated tests written prior to acceptance

- Monitoring

- Logging

We will create software that is extensible, scalable, and flexible. Some of the practices we will use to ensure extensibility, scalability, and flexibility are:

- Creating software that is readable by relying on clearly written code rather than comments

- Creating software that is repeatable

- Creating software that is reusable

- Automating whenever possible

- Developing consistency in our coding and architectural practices and standards

- Developing consistency in our deployment and testing standards

- Reducing dependence on tribal knowledge when it makes sense to do so

- Automating deployments to production

We commit to employing these practices as the way we create quality software and to applying this value system to every piece of code we develop. At a minimum, we will ensure that the following practices are adhered to before considering work Done, presenting it to the Product Owner, and asking them to accept it into their product.

- [List of specific minimum requirements that span all types of user stories]

Further, after the Product Owner accepts our work into their product we will ensure that the following practices are adhered to before we will consider a user story Done Done.

- [List of specific minimum requirements that are needed to move/validate code in prod]

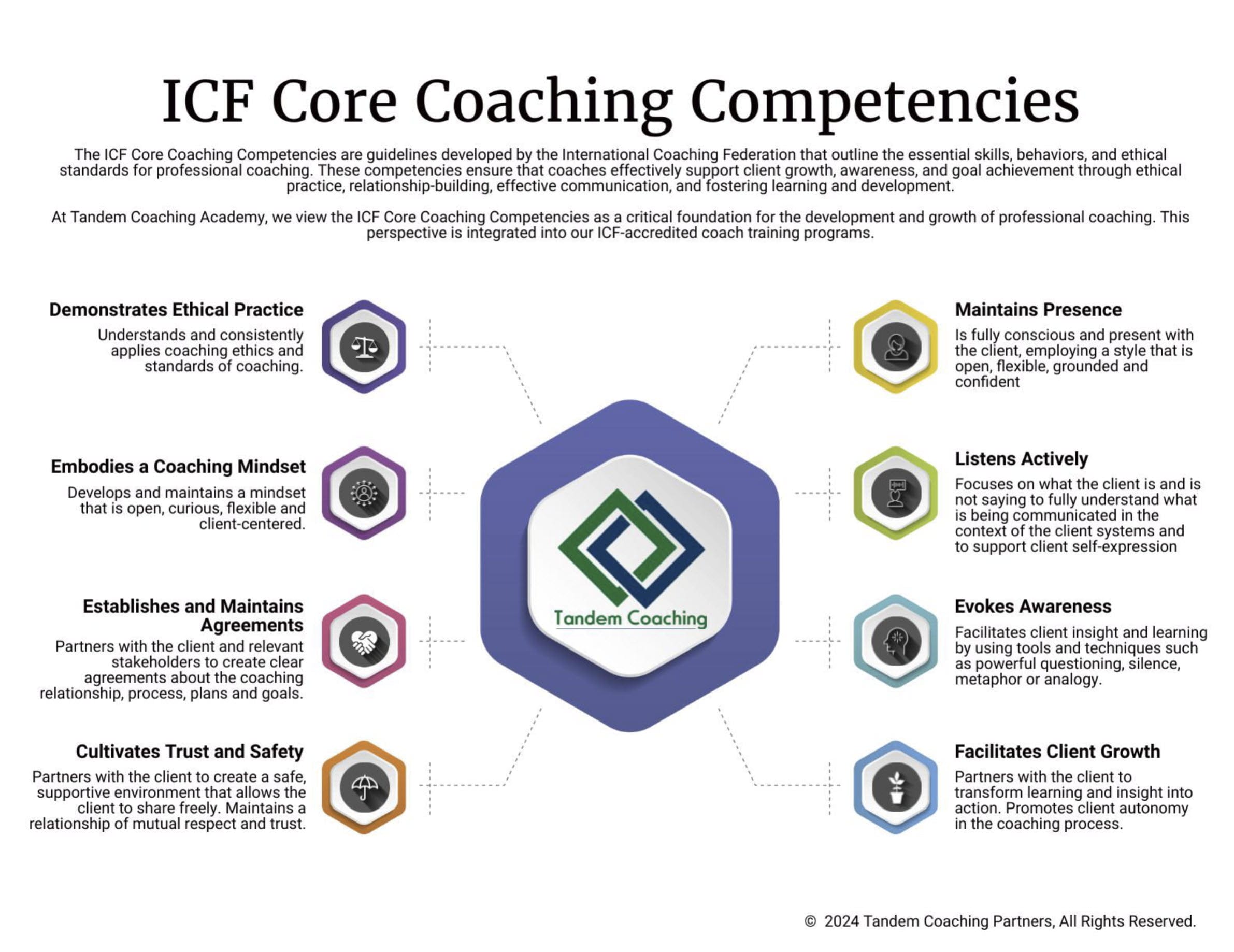

Unlock Your Coaching Potential with Tandem!

Dive into the essence of effective coaching with our exclusive brochure, meticulously crafted to help you master the ICF Core Coaching Competencies.

"*" indicates required fields

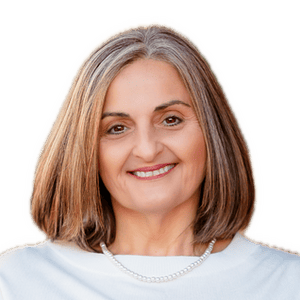

About the Author

Cherie Silas, MCC

She has over 20 years of experience as a corporate leader and uses that background to partner with business executives and their leadership teams to identify and solve their most challenging people, process, and business problems in measurable ways.